Importing data from one database engine to another is often a pain. Getting the data out of MySQL is easy, but pushing it to SQL Server may be harder. The easiest way to achieve this is to use “SQL Server Import and Export Wizard” to directly query your MySQL Database, and push the data in your SQL Server.

Trying other techniques often leads to a waste of time. For example, the MySqlDump tool can generate a SQL script for your exported database structures and data. But running it against SQL Server using the SQLCmd command line tool will fail with errors like :

[code]Sqlcmd: Error: Scripting error.[/code]

The MySQL SQL syntax is not the same as the SQL Server one. Converting the script is possible if you only have a couple of records, impossible when your SQL files is 14 Gb big – like mine – and that no file editor deals with such huge files (rest peacefully notepad++).

Another tempting option could be to use an intermediate CSV file. Everybody loves CSV ! You generate your CSV file with a MySQL “SELECT INTO … OUTFILE … “ query like :

[code]

SELECT *

FROM myTable

INTO OUTFILE ‘c:\\myTable.csv’

CHARACTER SET ‘latin1’

FIELDS TERMINATED BY ‘;’

ENCLOSED BY ‘”‘

LINES TERMINATED BY ‘\r\n’;

[/code]

And then try to feed it to SQL Server. Except that CSV is not standardized… So, even after adjusting column types, CSV delimiters, etc. the SQL Server Import and Export Wizard will fail because of a datetime field incorrectly formatted, or because of the character set and code page used by strings. By default, the MySQL exported CSV file seems to be UTF-8, but even though the SQL Server import wizard supports it, you get import errors as soon as you have non US characters…

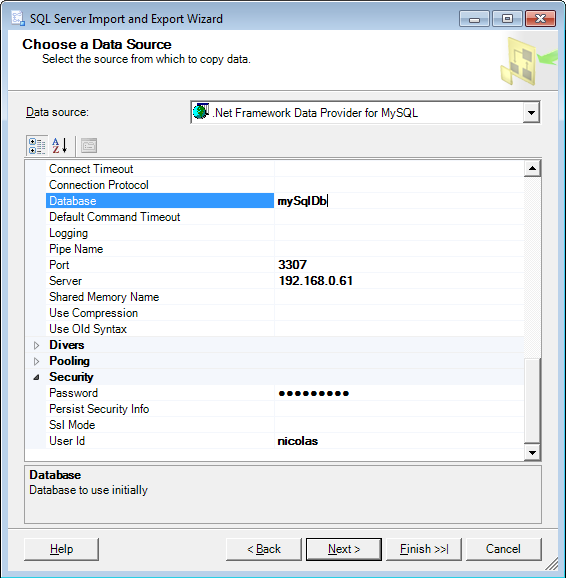

Eventually, the easiest solution is to use the “SQL Server Import and Export Wizard” with the .Net Framework Data Provider for MySQL :

Once your source database settings configured, the wizard will suggest a default mapping for the columns. You will probably keep it as is, and start the import, which will be done quickly and smoothly.

Président et fondateur de NeoLegal, développe des solutions logicielles qui facilitent le quotidien des professionnels du droit des sociétés.